The next thing I wanted to accomplish was being able to translate and rotate the body while keeping the feet planted. For now this will simple enable the robot to wiggle around and “dance”, but eventually these movements will be necessary inputs for traversing uneven terrain.

The concept for this is pretty straightforward. If i want the body to translate right while keeping the feet planted, you simply move all the feet to the left relative to the body:

newFootPositionX = initialFootPositionX - translateX

newFootPositionY = initialFootPositionY - translateY

newFootPositionZ = initialFootPositionZ - translateZ

I’m subtracting the translation because the feet are moving opposite the direction i want the body to go in. ‘translateY’ could be an input from your controller. I using the Bioloid Commander library for easy access to the Commander controller inputs.

Rotations aren’t quite as simple. The concept is to take an angular input, and provide the X,Y,Z offsets necessary for each leg to create that body rotation. A perfect job for the rotation matrix!

Rotation Matrices

Here is some good background info on rotation matrices. Its not nearly as hard as it looks. You simply apply one rotation matrix per axis of rotation. I wanted to be able to rotate on all three axis so i strung all three together.

(X,Y,Z)rotated = (X,Y,Z)initial*Rx*Ry*Rz

The angle pheta in each matrix is the input rotation angle for that axis. You do need some sort of software to crunch this up into closed form equations. I used Matlab which can do the symbolic matrix multiplication. Once I got my equations i was able to calculate my (X,Y,Z)rotated offsets and add them into the equations above to get:

newFootPositionX = initialFootPositionX - translateX - rotatedX

newFootPositionY = initialFootPositionY - translateY - rotatedY

newFootPositionZ = initialFootPositionZ - translateZ - rotatedZ

This *almost* works worked perfectly. The issue is that my corner legs were behaving funny. That i realized was because my math wasn’t taking into account their mounting angle. Basically each corner points 45 degrees out. I need to rotate all my coordinates for these legs and there’s no better tool than… the rotation matrix! This time its easier because this is a single axis rotation so there is only one matrix, Rz.

(X,Y,Z)coxaCorrected = (X,Y,Z)newFootPosition*Rz

Note that the 45deg input is the angle for the front right leg. The right rear leg would be 135deg, the left rear would be 225deg, and the upper left would be 315deg as you go around the clock.

Here’s the code:

/**********************************************************************************************************

fodyFK()

Calculates necessary foot position (leg space) to acheive commanded body rotations, translations, and gait inputs

***********************************************************************************************************/

void bodyFK(){

float sinRotX, cosRotX, sinRotY, cosRotY, sinRotZ, cosRotZ;

int totalX, totalY, totalZ;

int tempFootPosX[6], tempFootPosY[6], tempFootPosZ[6];

int bodyRotOffsetX[6], bodyRotOffsetY[6], bodyRotOffsetZ[6];

sinRotX = sin(radians(-commanderInput.bodyRotX));

cosRotX = cos(radians(-commanderInput.bodyRotX));

sinRotY = sin(radians(-commanderInput.bodyRotY));

cosRotY = cos(radians(-commanderInput.bodyRotY));

sinRotZ = sin(radians(-commanderInput.bodyRotZ));

cosRotZ = cos(radians(-commanderInput.bodyRotZ));

for( int legNum=0; legNum<6; legNum++){

totalX = leg[legNum].initialFootPos.x + leg[legNum].legBasePos.x;

totalY = leg[legNum].initialFootPos.y + leg[legNum].legBasePos.y;

totalZ = leg[legNum].initialFootPos.z + leg[legNum].legBasePos.z;

bodyRotOffsetX[legNum] = round(( totalY*cosRotY*sinRotZ + totalY*cosRotZ*sinRotX*sinRotY + totalX*cosRotZ*cosRotY - totalX*sinRotZ*sinRotX*sinRotY - totalZ*cosRotX*sinRotY) - totalX);

bodyRotOffsetY[legNum] = round( totalY*cosRotX*cosRotZ - totalX*cosRotX*sinRotZ + totalZ*sinRotX - totalY);

bodyRotOffsetZ[legNum] = round(( totalY*sinRotZ*sinRotY - totalY*cosRotZ*cosRotY*sinRotX + totalX*cosRotZ*sinRotY + totalX*cosRotY*sinRotZ*sinRotX + totalZ*cosRotX*cosRotY) - totalZ);

// Calculated foot positions to acheive xlation/rotation input. Not coxa mounting angle corrected

tempFootPosX[legNum] = leg[legNum].initialFootPos.x + bodyRotOffsetX[legNum] - commanderInput.bodyTransX + leg[legNum].footPos.x;

tempFootPosY[legNum] = leg[legNum].initialFootPos.y + bodyRotOffsetY[legNum] - commanderInput.bodyTransY + leg[legNum].footPos.y;

tempFootPosZ[legNum] = leg[legNum].initialFootPos.z + bodyRotOffsetZ[legNum] - commanderInput.bodyTransZ + leg[legNum].footPos.z;

}

// Rotates X,Y about coxa to compensate for coxa mounting angles.

leg[0].footPosCalc.x = round( tempFootPosY[0]*cos(radians(COXA_ANGLE)) - tempFootPosX[0]*sin(radians(COXA_ANGLE)) );

leg[0].footPosCalc.y = round( tempFootPosY[0]*sin(radians(COXA_ANGLE)) + tempFootPosX[0]*cos(radians(COXA_ANGLE)) );

leg[0].footPosCalc.z = tempFootPosZ[0];

leg[1].footPosCalc.x = round( tempFootPosY[1]*cos(radians(COXA_ANGLE*2)) - tempFootPosX[1]*sin(radians(COXA_ANGLE*2)) );

leg[1].footPosCalc.y = round( tempFootPosY[1]*sin(radians(COXA_ANGLE*2)) + tempFootPosX[1]*cos(radians(COXA_ANGLE*2)) );

leg[1].footPosCalc.z = tempFootPosZ[1];

leg[2].footPosCalc.x = round( tempFootPosY[2]*cos(radians(COXA_ANGLE*3)) - tempFootPosX[2]*sin(radians(COXA_ANGLE*3)) );

leg[2].footPosCalc.y = round( tempFootPosY[2]*sin(radians(COXA_ANGLE*3)) + tempFootPosX[2]*cos(radians(COXA_ANGLE*3)) );

leg[2].footPosCalc.z = tempFootPosZ[2];

leg[3].footPosCalc.x = round( tempFootPosY[3]*cos(radians(COXA_ANGLE*5)) - tempFootPosX[3]*sin(radians(COXA_ANGLE*5)) );

leg[3].footPosCalc.y = round( tempFootPosY[3]*sin(radians(COXA_ANGLE*5)) + tempFootPosX[3]*cos(radians(COXA_ANGLE*5)) );

leg[3].footPosCalc.z = tempFootPosZ[3];

leg[4].footPosCalc.x = round( tempFootPosY[4]*cos(radians(COXA_ANGLE*6)) - tempFootPosX[4]*sin(radians(COXA_ANGLE*6)) );

leg[4].footPosCalc.y = round( tempFootPosY[4]*sin(radians(COXA_ANGLE*6)) + tempFootPosX[4]*cos(radians(COXA_ANGLE*6)) );

leg[4].footPosCalc.z = tempFootPosZ[4];

leg[5].footPosCalc.x = round( tempFootPosY[5]*cos(radians(COXA_ANGLE*7)) - tempFootPosX[5]*sin(radians(COXA_ANGLE*7)) );

leg[5].footPosCalc.y = round( tempFootPosY[5]*sin(radians(COXA_ANGLE*7)) + tempFootPosX[5]*cos(radians(COXA_ANGLE*7)) );

leg[5].footPosCalc.z = tempFootPosZ[5];

}

NOW it works!

<insert video here>

Head back to the beginning of Project B.E.T.H.

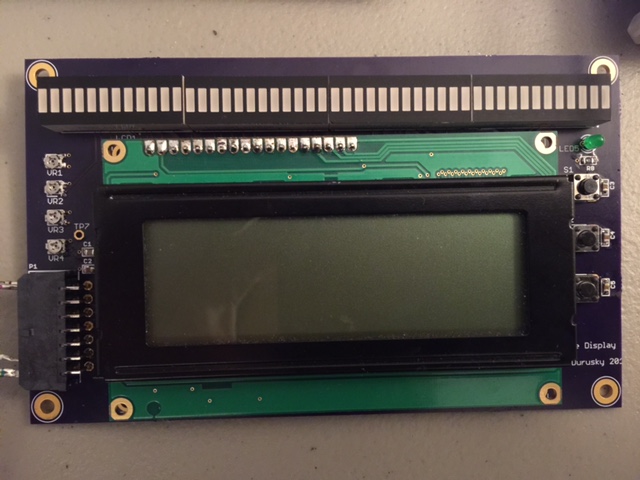

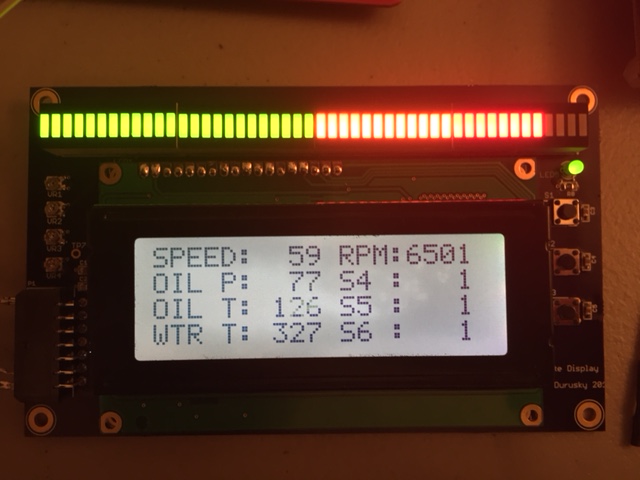

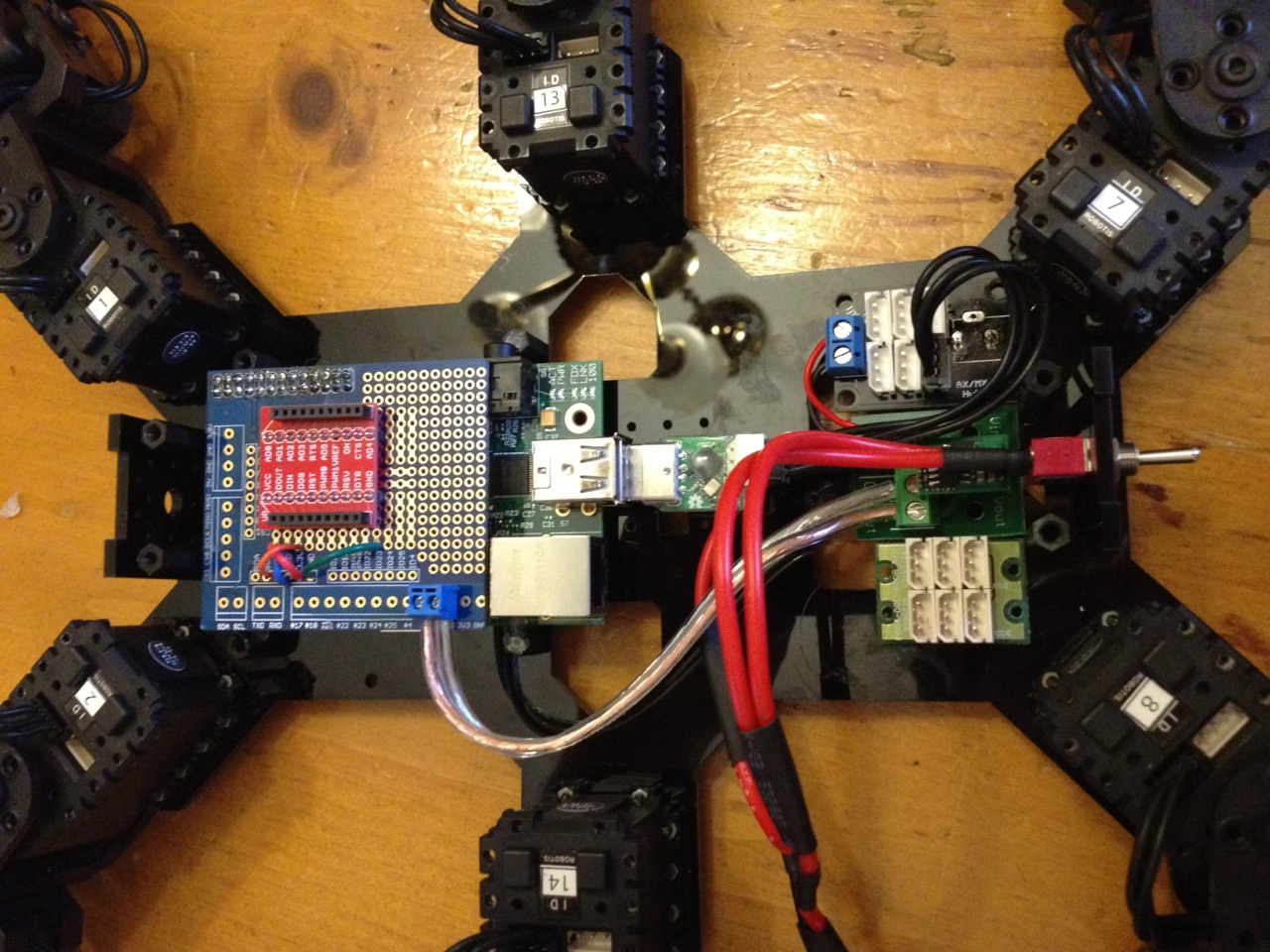

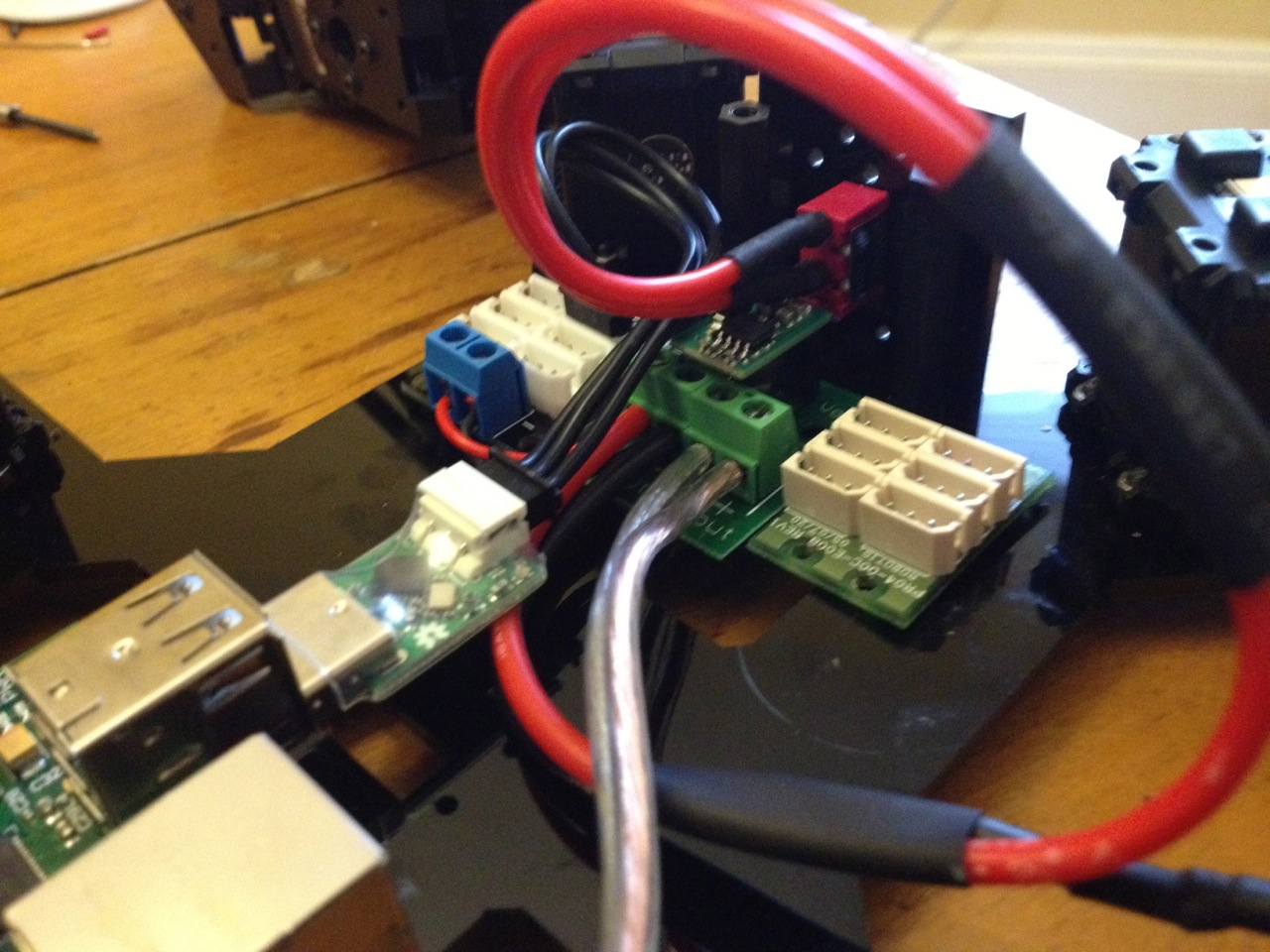

I had some time off for the holidays and managed to get one board built up.

I had some time off for the holidays and managed to get one board built up.